So as I look to build my first dedicated media server, I’m curious about what OS options I have that will check all the boxes. I’m interested in Unraid, and if there’s a Linux distro that works especially well, I’d be willing to check that out as well. I just want to make sure that whatever I pick, I can use qBittorrent and Proton, and get the Arr suite working.

Debian with docker compose or podman.

Are there any resources available for how to do this? I feel like I more or less understand how Docker works conceptually, but every time I try to actually use it, I quickly find myself in over my head.

Search for DockSTARTer and TRaSH Guides. They will give you the foundations of what you need.

Thank you!


The best thing is: if something doesn’t work, you tweak the compose file instead of having to retype or edit a command.

And you can keep your compose files and any supporting config files in a GitHub repo.

I don’t get how some people can raw dog a docker run command!
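To make the compose-vs-`docker run` point concrete, here’s a minimal Python sketch that translates a handful of `docker run` flags into the equivalent Compose service definition. The helper `run_to_compose` is hypothetical and only understands `-d`, `--name`, `-p`, `-v`, `-e KEY=VALUE` and `--restart` as separate arguments; real `docker run` has far more options. It prints JSON, which is valid YAML, so the output should work as a compose file.

```python
import json
import shlex


def run_to_compose(command: str) -> dict:
    """Translate a few common `docker run` flags into a Compose service.

    Hypothetical helper for illustration only: it understands -d, --name,
    -p/--publish, -v/--volume, -e/--env (KEY=VALUE form) and --restart,
    given as separate arguments (not the --flag=value form).
    """
    args = shlex.split(command)
    if args[:2] != ["docker", "run"]:
        raise ValueError("expected a 'docker run' command")
    args = args[2:]
    service: dict = {}
    name = "app"  # fallback service name if --name is absent
    i = 0
    while i < len(args):
        flag = args[i]
        if flag in ("-d", "--detach"):
            # Compose gets this from `docker compose up -d` instead.
            i += 1
            continue
        if not flag.startswith("-"):
            # First positional argument is the image; anything after it is the command.
            service["image"] = flag
            if args[i + 1:]:
                service["command"] = args[i + 1:]
            break
        if i + 1 >= len(args):
            raise ValueError(f"missing value for {flag}")
        value = args[i + 1]
        if flag == "--name":
            name = value
            service["container_name"] = value
        elif flag in ("-p", "--publish"):
            service.setdefault("ports", []).append(value)
        elif flag in ("-v", "--volume"):
            service.setdefault("volumes", []).append(value)
        elif flag in ("-e", "--env"):
            key, _, val = value.partition("=")
            service.setdefault("environment", {})[key] = val
        elif flag == "--restart":
            service["restart"] = value
        else:
            raise ValueError(f"unsupported flag: {flag}")
        i += 2
    if "image" not in service:
        raise ValueError("no image given")
    return {"services": {name: service}}


if __name__ == "__main__":
    cmd = (
        "docker run -d --name qbittorrent -p 8080:8080 "
        "-v /srv/appdata/qbittorrent:/config -e PUID=1000 -e PGID=1000 "
        "--restart unless-stopped lscr.io/linuxserver/qbittorrent:latest"
    )
    # JSON is valid YAML, so this output can be saved as compose.json.
    print(json.dumps(run_to_compose(cmd), indent=2))
```

Once the service lives in a file, fixing a mistake is an edit plus `docker compose up -d`, and the file can go straight into a Git repo.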

Thank you!

That’s what I’m running. I’m sure you could squeeze more performance out of a specialized OS, but headless Debian is fast and easy enough.

This. Besides, for servers, stability beats a 2-5% performance gain any day of the week.

Debian!

Always Debian.

I’m very happy running LXC containers in Proxmox.

This has worked well for me too, for many years now!

I just recently discovered Proxmox and am slowly moving my Docker containers off my NAS. I picked up a used Intel NUC (i5-8259, 32 GB RAM, 512 GB HDD). It’s been great so far, and I’m very happy with what it can do paired with Proxmox.

Any specific reason for the switch?

Performance mostly, encoding is better, reducing load on my NAS and using it specifically for storage. Immich performs better as well, it’s pretty resource hungry I found. I also am planning to set up Frigate for home security and that’s the main reason I wanted something with a bit more power.

I use Unraid and I’m loving it. It’s super stable and easy to manage, lets me set up Docker containers, pool my hard drives, and set up parity. Highly recommend. The only thing I’ve had a hard time with is finding a stable flash drive: you’d be surprised how many start to fail when used 24/7.

Came here to suggest Unraid as well. There are probably better options, but for a first-timer, I can’t imagine a better solution. Being able to add a hard drive to the array with virtually no configuration, as well as add up to two parity disks, is great. Caching is super easy too.
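For anyone wondering how a single parity disk can rebuild whichever drive dies: Unraid’s first parity disk is a bitwise XOR across the data disks. The Python sketch below models that idea with disks as equal-length byte strings, which is a simplification (the real thing works on whole drives block by block, and the second parity disk uses a different calculation not shown here).

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("disks must be the same length in this model")
    return bytes(x ^ y for x, y in zip(a, b))


def compute_parity(data_disks: list) -> bytes:
    """Parity = disk0 XOR disk1 XOR ... XOR diskN."""
    return reduce(xor_bytes, data_disks)


def rebuild_missing(surviving_disks: list, parity: bytes) -> bytes:
    """XOR the parity with every surviving disk; what's left is the missing disk."""
    return reduce(xor_bytes, surviving_disks, parity)


if __name__ == "__main__":
    disks = [b"movies!!", b"tv-shows", b"music..."]
    parity = compute_parity(disks)
    # Pretend disk 1 died and rebuild it from the other two plus parity.
    print(rebuild_missing([disks[0], disks[2]], parity))  # b'tv-shows'
```

This also shows why single parity only covers one failure: with two disks missing, the XOR leaves you with their combination, not either disk on its own.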

Plus they now support ZFS, so there’s that.

Unraid would be a very good choice for someone who is reaching out and asking this question. Debian can do the same, but I suspect it’ll be easier to set up and manage on Unraid.

Disk management in unraid is also great.

The thumb drive isn’t used all the time. I’ve been using a cheap USB drive that cost me like $12 several years ago, and haven’t had any issues yet. It’s been running constantly for the last year or two.

I had an issue recently where my USB drive was “disconnecting”, which caused Unraid to report read errors and then panic. I had checked, though, and it wasn’t being regularly read or written to, but it still caused my whole server to crash. Changing the USB drive has fixed it since, for now 😄

I have an overkill 128GB SanDisk flash drive I got for 13 dollars and it works great for my 24/7 unraid setup

I’ve heard smaller, older drives are actually more reliable in the long run, USB 2.0 ones especially, because the lower speed produces less heat.

Using debian 12.

Easy: Linux. I prefer Arch-based distros because of the AUR.

I wouldn’t use Arch on a server. Everything you install will probably be in a Docker container anyway, so fast updates for system packages aren’t as important as stability. Good choices would be Debian or Fedora Server. I personally use Fedora, but only because I use Fedora on the desktop too, so I know they have really good defaults (they’re really fast at adopting new stuff like Wayland, PipeWire, Btrfs with encryption and so on), and it’s nice that Cockpit is preinstalled, so I can do a lot of stuff through a web UI. Debian is probably more stable, tho: with Fedora there is a chance that something could break (even though it’s still pretty small), but Debian really just always works. The downside is, of course, very outdated packages, but as I said, on a server that doesn’t matter because Docker containers update independently from the system.

Nah, me neither; I had my desktop mindset going there. I use TrueNAS SCALE and couldn’t be happier.

Now that TrueNAS SCALE supports plain Docker (and runs on Debian), I think it’s a great option for an all-in-one media box. I’ve had my complaints with TrueNAS over the years, but it’s done a really great job of preventing me from shooting myself in the foot when it comes to my data.

I believe RAIDZ expansion is also now in stable (though it’s still better to do a bit of planning for your pool before pulling the trigger).

The RAIDZ stuff, as I understand it, seems pretty compelling. A setup where I can lose any given drive and replace it with no data loss would be ideal. So I would just run TrueNAS SCALE, which would manage my drives, and then install everything else in Docker containers or something?

Yes, what you’re saying is the idea, and why I went with this setup.

I am running raidz2 on all my arrays, so I can pull any 2 disks from an array and my data is still there.

Currently I have 3 arrays of 8 disks each, organized into a single pool.
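The layout above (three 8-disk raidz2 arrays, which ZFS calls vdevs, in one pool) can be sanity-checked with a bit of arithmetic. The Python sketch below estimates usable space and whether a given pattern of failures is survivable. The 10 TB disk size is a made-up example, and the capacity is a rough upper bound because it ignores ZFS metadata, padding and reserved space.

```python
from dataclasses import dataclass


@dataclass
class Vdev:
    disks: int
    parity: int      # 1 = raidz1, 2 = raidz2, 3 = raidz3
    disk_tb: float   # hypothetical per-disk size

    def raw_data_tb(self) -> float:
        # Rough estimate: data disks times disk size, ignoring ZFS overhead.
        return (self.disks - self.parity) * self.disk_tb


def pool_data_tb(vdevs: list) -> float:
    return sum(v.raw_data_tb() for v in vdevs)


def pool_survives(vdevs: list, failed_per_vdev: list) -> bool:
    """Data is striped across vdevs, so losing any single vdev loses the pool."""
    if len(failed_per_vdev) != len(vdevs):
        raise ValueError("need one failure count per vdev")
    return all(failed <= v.parity for v, failed in zip(vdevs, failed_per_vdev))


if __name__ == "__main__":
    pool = [Vdev(disks=8, parity=2, disk_tb=10) for _ in range(3)]
    print("approx. usable TB:", pool_data_tb(pool))
    print("2 failures in every vdev:", pool_survives(pool, [2, 2, 2]))
    print("3 failures in one vdev:", pool_survives(pool, [3, 0, 0]))
```

So “any 2 disks” holds per array: the pool can ride out up to six failures spread evenly, but a third failure inside the same raidz2 vdev takes everything with it.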

You can set up something similar with any RAID system, but so far TrueNAS has been rock solid and intuitive to me. My gripes are mostly around the (long) journey to “just Docker” for services. The parts of the UI / system that deal with storage seem to have a high focus on reliability / durability.

The latest version of TrueNAS supports Docker as “apps”, where you can input all config through the UI. I prefer editing the config as YAML, so the only “app” I installed is Dockge. It lets me add Docker Compose stacks, so I edit the compose files and run everything through Dockge. This is useful, as most arrs have example Docker Compose files.

For hardware, I went with an off-the-shelf desktop motherboard and a case with 8 hot-swap bays. I also have an HBA expansion card connected via PCI, with two additional 8-bay enclosures on the backplane. You can start with what you need now (just the single case/drive bays) and expand later (RAIDZ expansion makes this easier, since it’s now possible to add disks to an existing array).

If I was going to start over, I might consider a proper rack with a disk tray enclosure.

You do want a good amount of RAM for ZFS.

For boot, I recommend a mirror of at least two of the cheapest SSDs you can find, each in an enclosure connected via USB. Boot doesn’t need to be that fast. Do not use thumb drives unless you’re fine with replacing them every few months.

For Docker services, I recommend a mirror of two reasonably sized SSDs. Jellyfin/Plex in particular benefit from an SSD for loading metadata. And back up the entire services partition (dataset) to your pool regularly. If you don’t splurge on a mirror, at least do the backups. (Can you tell who previously had the single SSD running all of his services fail on him?)
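One way to do the “back up the services dataset to the pool” step is ZFS snapshots plus `zfs send | zfs receive`. The Python sketch below builds and runs those commands; the dataset names and the `@backup-YYYY-MM-DD` naming scheme are hypothetical. The first run sends a full stream, and later runs pass the previous snapshot so only the changes are sent (`zfs send -i`). Try it on throwaway datasets first.

```python
import subprocess
from datetime import date
from typing import Optional


def snapshot_name(dataset: str, day: date) -> str:
    # Hypothetical naming scheme: <dataset>@backup-YYYY-MM-DD
    return f"{dataset}@backup-{day.isoformat()}"


def backup_commands(src: str, dst: str, day: date, previous: Optional[str] = None):
    """Return argv lists for: take a snapshot, send it, receive it on the pool."""
    snap = snapshot_name(src, day)
    if previous is None:
        send = ["zfs", "send", snap]  # full stream for the first backup
    else:
        send = ["zfs", "send", "-i", previous, snap]  # only changes since `previous`
    # -F rolls the destination back to its latest snapshot before receiving.
    receive = ["zfs", "receive", "-F", dst]
    return ["zfs", "snapshot", snap], send, receive


def run_backup(src: str, dst: str, day: date, previous: Optional[str] = None) -> None:
    snap_cmd, send_cmd, recv_cmd = backup_commands(src, dst, day, previous)
    subprocess.run(snap_cmd, check=True)
    # Equivalent of `zfs send ... | zfs receive ...` in a shell.
    sender = subprocess.Popen(send_cmd, stdout=subprocess.PIPE)
    receiver = subprocess.Popen(recv_cmd, stdin=sender.stdout)
    sender.stdout.close()  # lets the sender see a broken pipe if the receiver exits early
    recv_rc = receiver.wait()
    send_rc = sender.wait()
    if recv_rc != 0 or send_rc != 0:
        raise RuntimeError(f"zfs send/receive failed (send={send_rc}, receive={recv_rc})")
```

Keeping the previous snapshot on both sides is what makes the next incremental send possible, so don’t prune it until the next run has succeeded.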

For torrents I am considering a cache SSD that will simply exist for incoming, incomplete torrents. They will get moved to the pool upon completion. This reduces fragmentation in the pool, since ZFS cannot defragment. Currently I’m using the services mirror SSDs for that purpose. This is really a long-term concern. I’ve run my pool for almost 10 years now, and most of the time wrote incomplete torrents directly to the pool. Performance still seems fine.

I use Alma because RHEL is designed for enterprise stability. Debian is also a good option.

Just don’t use Ubuntu. They do too much invisible fuckery with the system that hinders use on a server. For basic desktop use it’s fine, but never for a server.

Edit: but you should be doing most stuff in Docker anyway, so the actual OS isn’t going to matter too much. If you’re already comfortable with one base (Debian, RHEL) just use that one or a derivative.


Would that warning also apply to Mint, since it’s based on Ubuntu, as well as other Ubuntu-based distros?

I wouldn’t use Mint or other desktop-focused OS for a server. Ubuntu’s advantage of newer packages gets largely negated by how long Mint takes to release a new major release, so I’d rather use Debian.

I do think Ubuntu is fine for servers too, like almost any other point release distro.

Probably. I don’t know what Mint or others do under the hood, but I do know they’re definitely targeted at desktop use.

I assume any Linux or *BSD distro will work, especially one with Docker (which is most/all of them?) so you don’t have to worry about things being packaged for your distro so long as there’s a docker image. My server is Alpine Linux.

I use Alpine Linux for server-based stuff because it’s so light and the packages are kept up-to-date.

Like others here, I also set mine up with Debian and Docker Compose. Since it’s an always-on server, I wanted maximum stability. I don’t use Unraid, so I’m not sure about compatibility with that.

Data protection is a big concern. Is that something you have in your setup?

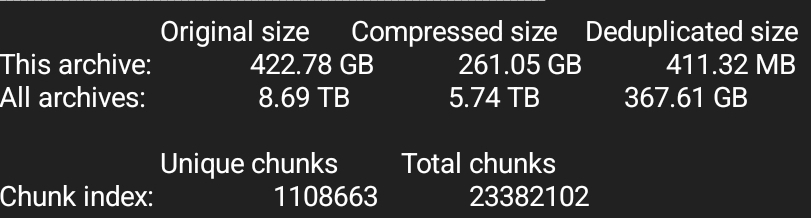

I run nightly archiving backups using Borg Backup.

Its compression and deduplication algorithms let me store 18 historical backups of about 422 GB each in only 367 GB of disk space.
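For scale: 18 × 422 GB is about 7.6 TB of backups held in 367 GB, roughly a 20× reduction, which works because consecutive nightly backups are mostly identical. The Python sketch below shows the basic idea with a toy store that keeps each unique chunk once (by SHA-256) and compresses it with zlib. This is a simplified stand-in, not Borg’s implementation: Borg uses content-defined chunking, which also dedupes data that shifts position inside a file, whereas this uses fixed-size chunks.

```python
import hashlib
import zlib


class ChunkStore:
    """Toy deduplicating backup store: every unique chunk is stored once, compressed."""

    def __init__(self, chunk_size: int = 4096):
        self.chunk_size = chunk_size
        self.chunks = {}    # sha256 hex digest -> compressed chunk
        self.archives = {}  # archive name -> ordered list of chunk ids

    def add_archive(self, name: str, data: bytes) -> None:
        ids = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            cid = hashlib.sha256(chunk).hexdigest()
            if cid not in self.chunks:  # only new content costs disk space
                self.chunks[cid] = zlib.compress(chunk)
            ids.append(cid)
        self.archives[name] = ids

    def restore(self, name: str) -> bytes:
        return b"".join(zlib.decompress(self.chunks[cid]) for cid in self.archives[name])

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


if __name__ == "__main__":
    store = ChunkStore()
    monday = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
    tuesday = monday[:-4096] + b"new log lines\n" * 292  # only the tail changed
    store.add_archive("monday", monday)
    store.add_archive("tuesday", tuesday)
    logical = len(monday) + len(tuesday)
    print(f"logical {logical} bytes, stored {store.stored_bytes()} bytes")
```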

That then gets mirrored to a cold storage drive manually every few months.

Ooh so I could do this to my media library?

If you want and have somewhere to store it.

I’m not all that concerned about the media drives: I don’t have a spare 30 TB to stuff that backup in, and that can be re-acquired if push comes to shove. I tend to just back up metadata and server config/database files, along with everything in /home, /root, and /var.
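As a sketch of that kind of nightly job, the Python below assembles a `borg create` command (Borg 1.x syntax) that backs up config and home directories while leaving the media library out simply by not listing it. The repository path, the `/srv/appdata` directory and the exclude list are hypothetical; check `borg create --help` for your version before relying on it.

```python
import shlex
import subprocess

REPO = "/mnt/backup/borg"                                # hypothetical repository location
SOURCES = ("/home", "/root", "/var", "/srv/appdata")     # /srv/appdata is hypothetical
EXCLUDES = ("/var/cache", "/var/tmp")                    # churny data not worth keeping


def borg_create_argv(repo: str = REPO, sources=SOURCES, excludes=EXCLUDES) -> list:
    argv = ["borg", "create", "--stats", "--compression", "zstd", "--exclude-caches"]
    for pattern in excludes:
        argv += ["--exclude", pattern]
    # {hostname} and {now} are placeholders expanded by Borg itself, not by Python.
    argv.append(f"{repo}::{{hostname}}-{{now}}")
    argv += sources  # media paths are simply not listed here
    return argv


def run_backup() -> None:
    subprocess.run(borg_create_argv(), check=True)


if __name__ == "__main__":
    # Print the command so it can be reviewed (or pasted into a cron job / systemd timer).
    print(shlex.join(borg_create_argv()))
```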

Unfortunately not in my setup, but that’s just because I don’t have the money to upgrade it at the moment and nearly everything I have is stuff I can easily redownload.

Once I can save up for it, I will up my storage and get some backups set up.

I have been fighting with Docker and Fedora on these exact items all weekend. Good luck!

I use Unraid on my NAS. I like it for storage, I don’t like it for running services. It’s still running my media stack, but only until I get that moved to a Debian server.

Depending on how involved you want to be and what you want to learn, Unraid might be a good fit for you. It’s easy and mostly just works.

I second UNRAID, but also for your media stack. I have my home server running UNRAID and around 20 services, with zero issues.

How do Proton VPN and qBittorrent play with that setup, if you know?

I’m sure any server oriented Linux distro will do fine. I use Debian.

I will note: I don’t know if you’re planning on having remote access (e.g. through Tailscale or a reverse proxy), but if you are, I found it quite a challenge to get Proton to play nice with them.

For newcomers I’d recommend docker and images like gluetun for setting up the VPN. It makes it easy to forward ports (for remote access) while keeping the torrent client behind the VPN.
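A common way to do this is to put qBittorrent inside gluetun’s network namespace (`network_mode: "service:gluetun"`), so its traffic can only leave through the VPN, and to publish the web UI port on the gluetun container instead. The Python sketch below writes such a stack as `compose.json` (JSON is valid YAML). The environment variable names follow the gluetun and LinuxServer images as I understand them; the private key is a placeholder and the host paths are hypothetical, so check the gluetun wiki’s Proton page before using it.

```python
import json
from pathlib import Path


def vpn_torrent_stack(wireguard_private_key: str, webui_port: int = 8080) -> dict:
    """Compose stack where qBittorrent shares gluetun's network namespace."""
    return {
        "services": {
            "gluetun": {
                "image": "qmcgaw/gluetun",
                "cap_add": ["NET_ADMIN"],
                "devices": ["/dev/net/tun:/dev/net/tun"],
                # Ports for anything behind the VPN are published here, on gluetun.
                "ports": [f"{webui_port}:{webui_port}"],
                "environment": {
                    "VPN_SERVICE_PROVIDER": "protonvpn",
                    "VPN_TYPE": "wireguard",
                    "WIREGUARD_PRIVATE_KEY": wireguard_private_key,
                },
                "restart": "unless-stopped",
            },
            "qbittorrent": {
                "image": "lscr.io/linuxserver/qbittorrent:latest",
                # No network of its own; gluetun's firewall is meant to block
                # anything that doesn't go through the tunnel.
                "network_mode": "service:gluetun",
                "depends_on": ["gluetun"],
                "environment": {
                    "PUID": "1000",
                    "PGID": "1000",
                    "WEBUI_PORT": str(webui_port),
                },
                "volumes": [
                    "./qbittorrent/config:/config",
                    "/srv/media/downloads:/downloads",  # hypothetical host path
                ],
                "restart": "unless-stopped",
            },
        }
    }


if __name__ == "__main__":
    stack = vpn_torrent_stack("REPLACE_WITH_YOUR_PRIVATE_KEY")
    Path("compose.json").write_text(json.dumps(stack, indent=2))
    # Then: docker compose -f compose.json up -d
```

Note that the qBittorrent service has no `ports` of its own; Compose won’t allow them alongside `network_mode: "service:..."`, which is exactly why the web UI port sits on gluetun.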

I would also recommend it, and I even tried it when I started, but I just couldn’t get it to work. Probably permission issues.

What did you end up using instead? It’s not a necessity, but remote monitoring and access has come in very handy in the past

For a while I split-tunneled Tailscale through an OpenVPN .conf file, but I recently switched to running qBittorrent in Docker with gluetun. qBittorrent is realistically the only service that needs to be behind a VPN, so it works out well.

I dunno what the best is, but if you choose NixOS, configure OpenVPN instead of trying to use the protonvpn package.

Just wanted to add that WireGuard is better than OpenVPN in every way, and you should use it, except for torrenting. I don’t remember the reason, but that’s the one time when you should be using OpenVPN. I think it had something to do with OpenVPN supporting TCP and WireGuard being UDP-only, or something like that.

WireGuard uses UDP, which results in better latency and power usage (e.g. on mobile). This does not mean WireGuard can’t tunnel TCP packets, just as OpenVPN also supports tunneling UDP.
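To illustrate: in a wg-quick style config, `AllowedIPs = 0.0.0.0/0, ::/0` routes all IP traffic, TCP and UDP alike, into the tunnel, and the tunnel itself is just UDP datagrams sent to the `Endpoint`. The Python sketch below renders such a config; the keys, addresses and endpoint are placeholders, not real Proton values (Proton’s downloadable configs fill those in for you).

```python
from typing import Optional


def render_wg_quick(
    private_key: str,
    address: str,
    peer_public_key: str,
    endpoint: str,
    dns: Optional[str] = None,
    allowed_ips: tuple = ("0.0.0.0/0", "::/0"),
) -> str:
    """Render a minimal wg-quick config: one interface, one peer."""
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",
    ]
    if dns:
        lines.append(f"DNS = {dns}")
    lines += [
        "",
        "[Peer]",
        f"PublicKey = {peer_public_key}",
        # Every destination matches, so all TCP and UDP traffic enters the tunnel.
        f"AllowedIPs = {', '.join(allowed_ips)}",
        # The tunnel itself travels as UDP to this host:port.
        f"Endpoint = {endpoint}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_wg_quick("PRIVATE_KEY", "10.2.0.2/32", "PEER_PUBLIC_KEY",
                          "vpn.example.com:51820", dns="10.2.0.1"))
```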

I’m using WireGuard successfully for torrenting.

As a note: while UDP is preferable for stability/power usage, UDP VPN traffic is often blocked by corporate firewalls (work, free public Wi-Fi, etc.) and won’t connect at all. I run OpenVPN over TCP on a standard port like 80/443/22/etc. to get through this, disguised as any other TLS connection.

Good point. Setting up Shadowsocks and tunneling WireGuard through it is on my to-do list. I believe Shadowsocks also works over TCP, so it should work reliably on filtered networks.

Interesting. Proton has example OpenVPN configs on their site, which were hugely helpful to me. Dunno if they have WireGuard equivalents, or if those are needed.

It’d be weird if they didn’t have WireGuard configs; WireGuard is basically the standard nowadays. It’s faster and safer (the code base is way smaller, so the chance of there being security vulnerabilities is a lot lower, and they can be fixed more easily).

Looks like they do have both OpenVPN and WireGuard configs. Is it true that OpenVPN is preferred for torrenting? That’s basically the only reason I use a VPN.

I think so. The main reason I use OpenVPN for that is just that it’s what gluetun uses. You should search that up online, tho; I don’t really remember why OpenVPN is better.